Programming 3.0

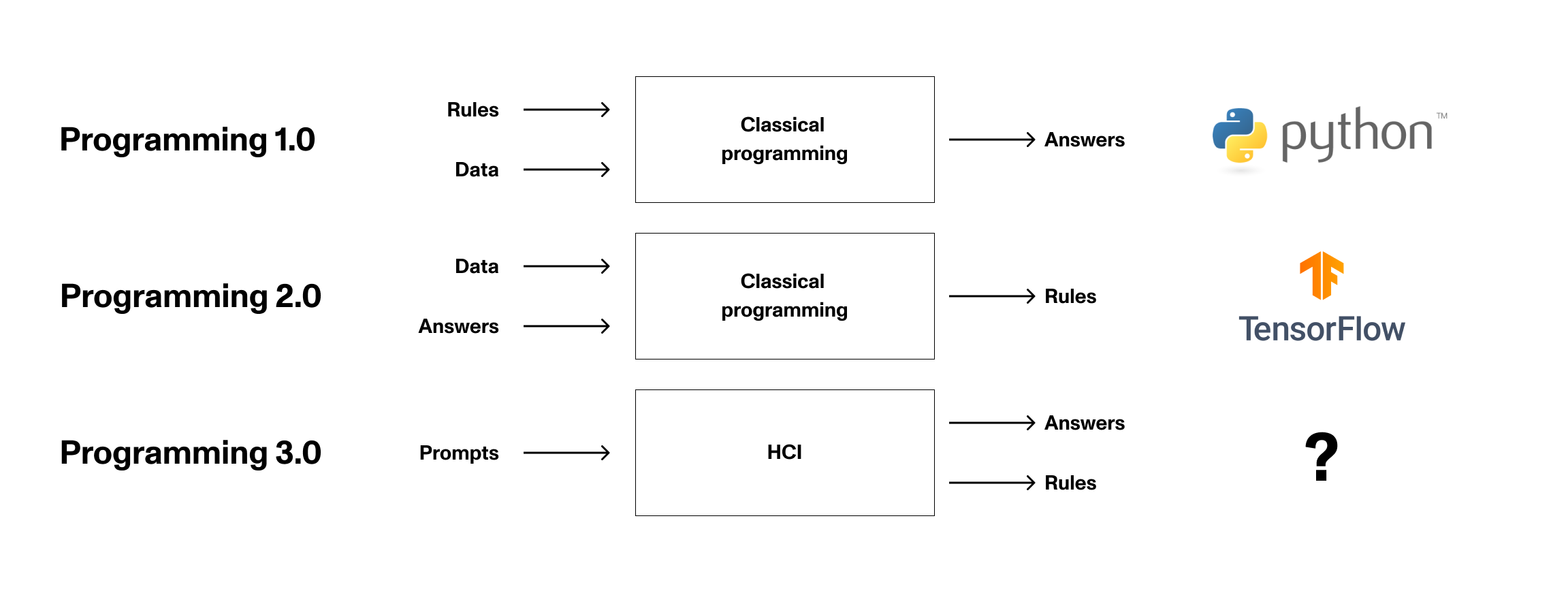

The first generation of programming, programming 1.0, was based on the idea that we can use machines to automate repetitive tasks. The history of modern software can be traced back to the 1940s, when early computers were used to calculate ballistic trajectories for the war effort. Programming 1.0 relies on explicit languages and rules that allow developers to crystallize ideas into a structured form.

The next generation of programming, programming 2.0, is built on the assumption that programming languages exist. Programming 2.0 uses machine learning to find patterns in data that are otherwise difficult for humans to manually define. This revolution was kicked off with the release of ImageNet and the realization that large labeled datasets can effectively train neural networks.

After using GPT, I’m increasingly convinced that we’re seeing the birth of programming 3.0. Before I explain why, it’s important to note that new paradigms in technology are often built on the implicit assumption that the previous layer in the stack exists. We’ve been in the “machine learning” era since ~2014, and while there is still plenty of business opportunity in both programming 1.0 and 2.0, this new model of human-computer interaction feels totally different.

Programming 3.0 is built on the assumption that trained models exist. Instead of writing code or designing datasets, programmers will become computer psychologists. Their specialty will be crafting prompts that coax useful answers out of extremely large models trained on huge datasets.
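To make the three generations concrete, here is a minimal sketch in Python of the same toy task, deciding whether a review is positive, written each way. Everything in it is an assumption for illustration: the function names, the word list, and the tiny training set are made up. The 3.0 version only builds the prompt text. Sending it to a model like GPT is left out, because that depends on whichever API you happen to use.

```python
from collections import Counter

# Programming 1.0: a human writes the rule explicitly.
def is_positive_v1(text: str) -> bool:
    positive_words = {"great", "love", "excellent"}  # hand-picked, hypothetical list
    return any(word in positive_words for word in text.lower().split())

# Programming 2.0: the rule is learned from labeled examples.
# Each word gets +1 for every positive example it appears in and -1 for every negative one.
def train_v2(examples: list[tuple[str, bool]]) -> Counter:
    weights: Counter = Counter()
    for text, label in examples:
        for word in text.lower().split():
            weights[word] += 1 if label else -1
    return weights

def is_positive_v2(weights: Counter, text: str) -> bool:
    # Unseen words score 0 because Counter returns 0 for missing keys.
    return sum(weights[word] for word in text.lower().split()) > 0

# Programming 3.0: the "program" is a prompt for a model that already exists.
# This only assembles a few-shot prompt; calling the model is out of scope here.
def build_prompt_v3(examples: list[tuple[str, bool]], text: str) -> str:
    lines = ["Classify the sentiment of each review as positive or negative.", ""]
    for example_text, label in examples:
        lines.append(f"Review: {example_text}")
        lines.append(f"Sentiment: {'positive' if label else 'negative'}")
        lines.append("")
    lines.append(f"Review: {text}")
    lines.append("Sentiment:")
    return "\n".join(lines)

if __name__ == "__main__":
    examples = [
        ("I love this phone", True),
        ("terrible battery and awful screen", False),
    ]
    print(is_positive_v1("Great value"))
    print(is_positive_v2(train_v2(examples), "love the phone"))
    print(build_prompt_v3(examples, "works fine"))
```

Notice what changes between versions: in 1.0 I edit the rule, in 2.0 I edit the dataset, and in 3.0 I edit the wording and the examples in the prompt. That last kind of editing is what I mean by computer psychology.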

As with any new paradigm, there are plenty of open questions:

- What types of models are best for answering certain types of questions?

- What prompts elicit useful responses from large models? Can we use them to answer unsolved questions in physics? What about philosophy?

- When is the right time to train a new model versus using an existing pre-trained model?

- What types of frameworks will get built to simplify prompt writing? What is the TensorFlow equivalent for programming 3.0?

I don’t know the answers to these questions yet, but I’m going to keep asking. As we see more models emerge with the abilities of GPT, don’t be surprised if the ability to talk to them becomes an important skill in the workforce.

Sunday Scaries is a newsletter that answers simple questions with surprising answers. The author of this publication is currently living out of his car and traveling across the United States.

If you enjoyed this issue of Sunday Scaries, please consider sharing it with a friend. The best way to help support this publication is to spread the word.